Introduction

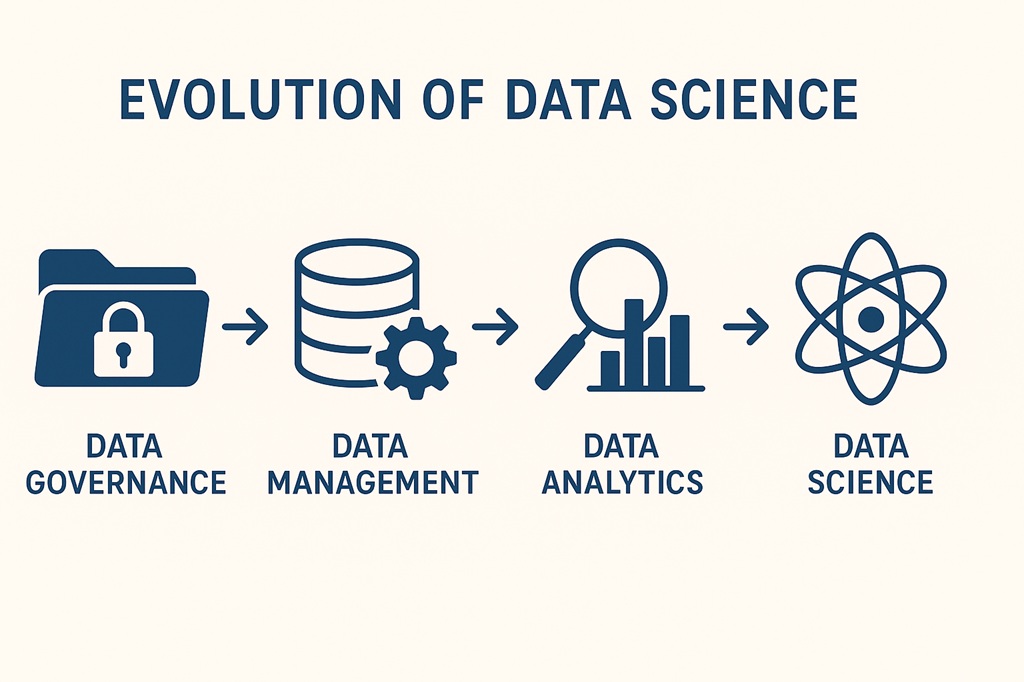

The field of data science, in all its methodological diversity and technological impact, is both a child and a driver of digital transformation across industry, government, and society. Yet, data science’s explosive rise is inextricably linked to a much older—but equally foundational—discipline: data governance. Tracing the evolution from early data governance practices rooted in database management to the data-driven, AI-enhanced enterprises of today reveals not just a technological story, but an evolution in policy, culture, and organizational strategy. The journey from data governance to data science is marked by pivotal moments in regulatory mandates, technological leaps (from relational databases to big data architectures), paradigm shifts (data warehousing, business intelligence, big data, AI), and the ongoing integration of ethical, transparent practices in the age of machine learning and artificial intelligence.

This report offers a chronological and analytical exploration of how data governance originated, matured, and ultimately enabled the emergence of data science. It examines each phase in depth, connecting academic insights, regulatory landmarks, case studies, implementation frameworks, and future trends. Critical themes such as metadata management, regulatory compliance (GDPR, CCPA, HIPAA), data quality, AI explainability, and the security and ethical imperatives of today’s AI-driven world are all addressed. A timeline summarizes key milestones, and case studies further ground the analysis. The discussion concludes with a review of contemporary and emerging governance paradigms—including data mesh, data fabric, and AI/model governance—and their role in shaping the next era of data science.

Origins of Data Governance in Database Management (1960s–1980s)

The origins of data governance lie in the technological advancements and management needs of database systems beginning in the mid-20th century. As the volume and variety of data increased, organizations sought methods to ensure accuracy, consistency, and security. The earliest data management practices focused on the storage, processing, and retrieval of data using magnetic tapes, hierarchical and network databases, and structured file systems.

By the 1960s, the emergence of the first Database Management Systems (DBMS), notably Charles Bachman’s Integrated Data Store (IDS) and IBM’s Information Management System (IMS), revolutionized data handling. This period introduced crucial capabilities: data storage independent of application logic, systematic data access protocols, and the first seeds of data quality control and security policies. The relational database model, proposed by Edgar F. Codd in 1970, underscored the need to manage not just data storage but also the relationships, integrity, and semantics of data. It led to Structured Query Language (SQL) and the mainstream adoption of robust, scalable database platforms such as Oracle and IBM DB2.

Early data governance, though not yet formalized, manifested itself through the establishment of roles and responsibilities—such as database administrators charged with enforcing access controls, managing data integrity, and maintaining transaction consistency. The imperative for accurate, consistent, and secure data began to take precedence, especially as databases supported increasingly critical business functions, moving from payroll processing to inventory control, and ultimately to online applications such as reservation systems (e.g., SABRE for American Airlines).

A crucial element of this era, often overlooked, was the recognition that poor data management could compromise business operations. Data quality, redundancy elimination, consistency in definitions, and auditability were all fundamental concerns, hinting at what would, in later decades, become formalized data governance.

Formalization of Data Governance as a Discipline (1990s)

The 1990s marked the transition from implicit, IT-driven data management policies to the formalization of data governance as a distinct organizational discipline. The formidable growth of corporate systems, the proliferation of personal computers, and the explosion of data generated by online and transactional systems demanded new strategies for managing and controlling enterprise data.

Two core drivers propelled this formalization:

- Regulatory Pressures: The enactment of foundational laws such as the Health Insurance Portability and Accountability Act (HIPAA, 1996) in the United States and the gradual strengthening of privacy protections in Europe highlighted the need for enterprise-wide policies on data privacy, accuracy, and auditable control. The Sarbanes-Oxley Act (SOX, 2002) later added data integrity and traceability requirements for financial reporting, mandating explicit internal controls.

- Business Risk and Accountability: As organizations recognized data as a valuable asset—and source of risk—the integration of accountability frameworks became critical. Roles such as data stewards, custodians, and governance councils emerged, tasked with defining standards, resolving data ambiguities, and ensuring regulatory compliance across increasingly fragmented IT environments.

Academic and industry discourse began to differentiate “data governance” from generic data management. Data governance evolved to mean the strategic planning, oversight, and control of data assets through policies, standards, and enforcement mechanisms.

Key Milestones:

- The establishment of the Data Governance Institute (DGI, 2002) provided guidelines and best practices for organizational data governance programs.

- DAMA International’s Data Management Body of Knowledge (DAMA-DMBOK) and similar frameworks codified the distinct functions of data governance: defining ownership, access, quality, and security; assigning roles; and aligning data policies with business objectives.

Data Governance Frameworks and Standards (2000s)

The early 2000s saw the proliferation of governance frameworks and international standards, reflecting both a maturation of the field and recognition that siloed approaches were insufficient for large, data-rich organizations.

Development of Industry Frameworks

- DAMA-DMBOK: Provided comprehensive best practices for data management and governance, establishing data governance as a formal domain.

- COBIT, CMMI DMMM, ISO/IEC 38505, DCAM, NIST: Expanded the focus to include IT governance, data quality benchmarks, security standards, cloud-specific governance, and more.

Essential Components of Modern Governance

- Clear Policies: Addressing data handling, access, retention, and deletion.

- Defined Roles: Governance councils, stewards, data owners.

- Data Catalogs/Glossaries: Centralizing metadata, lineage, and definitions.

- Lifecycle Management: Ensuring compliance from data creation to archival/destruction.

- Quality Assurance: Defining data quality metrics, validation, and cleansing routines.

- Security and Compliance: Integrating regulatory obligations such as GDPR, CCPA, and HIPAA into everyday operations.

Global Collaboration: International organizations (UN, OECD, G7, G20) began addressing cross-border data flows, data provenance, and digital sovereignty, cementing the global relevance of robust data governance frameworks.

Transition to Analytics: Business Intelligence and Data Warehousing

With data warehousing and business intelligence (BI) architectures emerging in the late 1980s and 1990s, governance priorities expanded from operational integrity to supporting data-driven decision-making.

The Data Warehouse Era (1980s–2000s)

- Data warehouses centralized data from disparate transactional systems, integrating and cleaning records to create a “single version of the truth” for historical analysis.

- ETL (extract, transform, load) processes formalized data movement and standardization between operational systems and warehouses, necessitating new quality controls and enhanced metadata management.

- BI workloads—reporting, dashboards, and analytical queries—began to drive not just demand for data but the need for trust, documentation, and consistency.
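The ETL pattern described above can be sketched in a few lines. This is a minimal illustration, not a description of any particular warehouse tool: the function `run_etl`, the field names `customer_id` and `country`, and the audit record layout are all hypothetical. It shows the governance-relevant parts of the pattern: standardizing values, rejecting rows that fail a quality rule, and keeping a count-based audit trail with the source name attached to every loaded row.

```python
def _clean(value):
    """Return a stripped string, treating None as empty."""
    return "" if value is None else str(value).strip()


def run_etl(source_name, raw_rows):
    """Extract rows, standardize them, and load only the valid ones.

    Returns (loaded, rejected, audit) so that quality problems are
    recorded rather than silently dropped.
    """
    loaded, rejected = [], []
    for row in raw_rows:
        customer_id = _clean(row.get("customer_id"))
        if not customer_id:
            # Quality control: a row without a key cannot be integrated.
            rejected.append((row, "missing customer_id"))
            continue
        loaded.append({
            "customer_id": customer_id,
            "country": _clean(row.get("country")).upper(),  # standardize codes
            "_source": source_name,  # simple row-level lineage marker
        })
    audit = {
        "source": source_name,
        "read": len(raw_rows),
        "loaded": len(loaded),
        "rejected": len(rejected),
    }
    return loaded, rejected, audit
```

Returning the rejected rows together with a reason, instead of discarding them, is the design choice that makes the process auditable.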

During this era, the limitations of ad hoc governance became clear:

- Proliferation of multiple, fragmented data warehouses (silos) revealed new consistency and integration challenges.

- Metadata management, data lineage, and comprehensive access controls emerged as core governance requirements, as inaccuracies or ambiguities could undermine critical business reports.

Master Data Management (MDM) evolved in parallel, seeking to provide agreed-upon definitions and consistent keys for core business entities such as customers, suppliers, and products—further reinforcing the foundational role of governance in analytical environments.

Emergence of Big Data Technologies and Data Lakes

The late 2000s and 2010s ushered in the age of Big Data—a paradigm built on the “4 Vs”: Volume, Velocity, Variety, and Veracity of data.

Technological Shifts:

- NoSQL and Distributed Processing: Platforms like Hadoop, Cassandra, and Spark supplemented relational systems for workloads those systems handled poorly, storing and processing petabytes of structured, semi-structured, and unstructured data.

- Data Lakes: Repositories capable of ingesting raw data at scale—often without predefined schemas—accelerated the democratization and complexity of organizational data estates.

Governance Implications:

- Loosened Structure: The flexibility and scale of big data posed serious governance challenges; data lakes often threatened to become “data swamps” without standardized controls.

- Expanded Use Cases: Data scientists and engineers required access to diverse, sometimes sensitive, sources for advanced analytics, AI, and machine learning, increasing risks around data quality, lineage, and security.

- Real-Time and Multi-Modal Data: Streaming data (e.g., IoT sensors, clickstreams, social media) intensified the need for responsive, automated governance solutions.

In response, governance adapted:

- Emphasis on metadata management, automated data classification, and dynamic policy enforcement.

- Recognition of the need for both centralized and decentralized (federated) approaches, as different business units required agility while still adhering to organizational standards.

Evolution of Data Governance Principles in the Big Data Era

As the Big Data era matured, several new governance principles solidified:

- Trust and Transparency: Increased transparency (data lineage, quality checks) and trust-building became critical amid fears of bias, misuse, and data privacy breaches.

- Federated Roles and Domain Ownership: The rise of data mesh architectures promoted distributed stewardship with centrally enforced policies—enabling both autonomy and cohesion.

- Automation and AI-Assisted Governance: As volume and complexity grew, manual governance proved untenable; automation using AI and machine learning to tag, validate, monitor, and remediate data quality and security issues became standard.

- Adaptive, Lifecycle-based Approaches: Governance expanded to cover the entire data lifecycle—from creation to archival or destruction—tuning controls to the varying risks and requirements at each step.

Rise of Data Science as a Distinct Discipline

Concurrent with the big data revolution, data science blossomed into its own field—a blend of statistics, computer science, and domain expertise, dedicated to extracting knowledge and actionable insights from vast and often heterogeneous datasets.

Historical Roots and Evolution:

- Early forerunners such as John Tukey (1962) and Peter Naur (1974) argued for a new science of data analysis that fused computation, statistics, and empirical inquiry.

- The International Association for Statistical Computing (IASC, 1977) formalized the link between statistics and computer technology.

- By the late 1990s and early 2000s, “data scientist” emerged as a profession, denoting practitioners able to manage, analyze, and communicate complex datasets using sophisticated statistical and machine learning methods.

Key characteristics of modern data science:

- Problem Definition and Data Wrangling: Framing questions, sourcing data, and performing extensive data cleaning and preparation.

- Analytical Modeling and Machine Learning: Building, validating, and deploying predictive and inferential models.

- Communicating Insights: Visualizations, dashboards, and stakeholder engagement.

- Collaboration with Data Management/Governance Teams: Ensuring analyses rest on trustworthy, compliant data foundations.

Impact of Governance: Data scientists depend on governed data for reliable, reproducible results. Data quality, metadata, lineage, and regulatory compliance act as both unavoidable constraints on their work and enablers of its success.

Integration of Machine Learning into Data Management Practices

As machine learning (ML) and AI have become ingrained in operational and analytical workflows, so too have their governance implications. The integration of ML into data management has required new forms of collaboration between data engineering, governance, and data science teams.

Key Developments:

- In-Database and In-Platform Analytics: The growth of ML libraries that run where the data lives (e.g., Oracle Data Mining inside the database, Spark MLlib on the cluster) has enabled analytics at scale, rooting data science more deeply in enterprise data management systems.

- Lifecycle Management: ML models, like data assets, now require versioning, lineage tracking, validation, performance monitoring, and retirement/refresh controls.

- Automated Data Preparation: AI-driven tools can detect and resolve data quality issues, streamline data wrangling, automate cataloging/tagging, and support regulatory compliance at scale.

Governance Considerations:

- Ensuring that training datasets and features are accurately labeled, free from prohibited data, and compliant with privacy laws.

- Providing explainability and transparency for ML-driven decisions, essential for high-stakes applications such as credit scoring, healthcare, or criminal justice.

- Managing model risk and drift; continuous monitoring and validation are necessary to prevent performance degradation or regulatory violations over time.
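Drift monitoring can be made concrete with a simple statistic. The sketch below uses the Population Stability Index (PSI), one commonly used drift measure. The text above does not name a specific method, so PSI is an illustrative choice here. The function name, the bin count, and the smoothing constant `eps` are assumptions.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """Compare two samples of a numeric feature or score.

    `expected` is the reference sample (e.g. training data) and
    `actual` is the recent sample. Bins are equal-width over the
    range of `expected`; out-of-range values fall into the edge bins.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant data

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

A frequently quoted rule of thumb treats values below about 0.1 as stable and values above about 0.25 as a significant shift. Those thresholds are conventions rather than regulatory requirements, and a monitoring job would typically alert on them rather than act automatically.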

Emergence of AI and Its Impact on Data Governance

AI and advanced analytics mark a new frontier in both the opportunity and complexity of data governance. The growing power and ubiquity of AI/ML models, particularly generative AI and deep learning, raise new governance demands.

AI Governance Frameworks:

AI governance encompasses the end-to-end process of ensuring AI systems are safe, ethical, transparent, and compliant. Its main components are:

- Policy and Standards: Aligning AI development with principles such as fairness, accountability, transparency, and respect for human rights (as exemplified by frameworks from the OECD, UNESCO, EU’s AI Act, and various national and regional bodies).

- Oversight and Accountability: Multidisciplinary boards—often spanning legal, technical, domain, and policy expertise—regularly review and approve AI deployments.

- Risk Management: Identifying, monitoring, and mitigating risks such as bias, discriminatory impact, privacy breaches, security vulnerabilities, and explainability deficits.

- Continuous Monitoring and Explainability: Tools and workflows for ongoing oversight, performance alerts, and audit trails are now essential, especially in regulated or high-impact sectors.

Regulatory Action:

- Comprehensive legislation such as the EU AI Act (in force since 2024, with obligations phasing in from 2025), GDPR (Europe), and sector-specific U.S. rules (SR 11-7 for model risk management in banking, HIPAA for health data) codify requirements for model documentation, explainability, fairness, risk management, and data minimization.

- Organizations must ensure that AI/ML models—especially those using personal/sensitive data—are governed with explicit consent, purpose limitation, data subject rights, and robust privacy and security controls.

AI-Specific Governance Practices:

- Model Inventories and Registries: Centralized tracking of all deployed models, with documentation, data sources, performance metrics, and ownership assignments.

- Bias Auditing and Fairness Assessments: Proactive reviews to detect and eliminate discriminatory outcomes.

- Explainability Tools: Enabling decision traceability, counterfactual analysis, and stakeholder communication—vital for legal defensibility and public trust.
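One simple form of bias audit compares how often each group receives a favorable outcome. The sketch below computes the demographic parity difference, which is only one of several fairness metrics and is not sufficient on its own. The record layout and the key names `group` and `approved` are hypothetical.

```python
from collections import defaultdict


def selection_rates(records, group_key, outcome_key):
    """Share of favorable outcomes per group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for r in records:
        totals[r[group_key]] += 1
        if r[outcome_key]:
            positives[r[group_key]] += 1
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(records, group_key="group", outcome_key="approved"):
    """Gap between the highest and lowest group selection rate.

    0.0 means every group is selected at the same rate; larger
    values indicate a larger disparity worth investigating.
    """
    rates = selection_rates(records, group_key, outcome_key)
    return max(rates.values()) - min(rates.values())
```

What gap counts as acceptable is a policy decision for the oversight board, not something the metric itself can settle.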

Model and AI Governance Frameworks Influencing Data Science

As AI and machine learning became core to business processes, model governance matured as a specialized extension of both data governance and operational risk management.

Key Elements of Model and AI Governance:

- Development and Documentation: Clear model objectives, data selection protocols, and documented design assumptions.

- Validation and Back-Testing: Independent reviews validate models against both historical and current data to ensure robustness, accuracy, and fairness.

- Model Deployment and Continuous Monitoring: Real-time performance tracking, automated alerting on drift or degradation, and scheduled refreshes or retirements.

- Model Inventory and Reporting: Centralized catalogs support regulatory reporting, lifecycle management, and operational integrity.

- Role Assignment and Workflow Management: Clear delineation of responsibilities among developers, risk managers, business units, and auditors—often supported by automated workflow tools.

- Compliance and Regulatory Integration: Alignment with laws (GDPR, HIPAA, CCPA) and emerging AI-specific regulations is now a non-negotiable requirement.
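A model inventory of the kind listed above can start as a small, typed registry. The following is a minimal in-memory sketch under stated assumptions: the class names, the fields, and the 365-day revalidation window are all hypothetical, and a production inventory would persist records and integrate with workflow tooling.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


@dataclass
class ModelRecord:
    name: str
    version: str
    owner: str                      # accountable person or team
    data_sources: list
    metrics: dict = field(default_factory=dict)
    last_validated: Optional[date] = None


class ModelInventory:
    """Central catalog of deployed models, keyed by (name, version)."""

    def __init__(self):
        self._records = {}

    def register(self, record):
        key = (record.name, record.version)
        if key in self._records:
            raise ValueError(f"{key} is already registered")
        if not record.owner:
            raise ValueError("every model needs an accountable owner")
        self._records[key] = record

    def overdue_for_validation(self, today, max_age_days=365):
        """Models never validated, or validated too long ago."""
        return [
            r for r in self._records.values()
            if r.last_validated is None
            or (today - r.last_validated).days > max_age_days
        ]
```

Refusing to register a model without an owner is a small example of turning a governance rule into an enforced precondition rather than a guideline.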

The rapid evolution of AI use cases (e.g., generative text/image models) underscores the dynamic tension between innovation and responsible oversight.

Role of Data Governance in Data Quality and Metadata Management

Metadata as Governance Bedrock: Metadata management has become a linchpin of modern data governance. It underpins discoverability, traceability, data quality, and regulatory compliance.

- Metadata Catalogs: Data catalogs and glossaries standardize definitions, structure, provenance, and relationships between data assets—enabling cross-team data collaboration and minimizing redundancy or ambiguity.

- Active Metadata and Automation: Advances in AI-enabled, active metadata management have made real-time, dynamic governance possible: automating cataloging, classification, and lineage, and enforcing access or masking rules at scale.

- Quality Controls and Auditing: Automated data quality checks—validity, completeness, freshness—are built into every stage of the data lifecycle, with dashboards and alerts supporting ongoing governance and regulatory reporting.

A mature metadata program not only supports internal efficiency and analytics, but also enables compliance audits (e.g., for GDPR, HIPAA, or CCPA), risk mitigation, and accelerated onboarding for new data science/analytics initiatives.
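The three quality dimensions named above (validity, completeness, freshness) can each be expressed as a small, automatable check. The sketch below is illustrative only: the function name, the report fields, and the idea of passing a validity rule as a callable are assumptions, not a reference to any specific data quality tool.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class QualityReport:
    completeness: float  # share of rows with every required field filled
    validity: float      # share of rows passing the validity rule
    fresh: bool          # True if the newest row is within max_age


def check_quality(rows, required, is_valid, ts_field, max_age, now=None):
    """Score a batch of dict rows on three quality dimensions.

    Timestamps in `ts_field` must be timezone-aware datetimes so they
    can be compared with `now`.
    """
    now = now or datetime.now(timezone.utc)
    if not rows:
        return QualityReport(0.0, 0.0, False)
    complete = sum(
        all(r.get(f) not in (None, "") for f in required) for r in rows
    )
    valid = sum(1 for r in rows if is_valid(r))
    newest = max(r[ts_field] for r in rows)
    return QualityReport(
        completeness=complete / len(rows),
        validity=valid / len(rows),
        fresh=(now - newest) <= max_age,
    )
```

A report like this can feed the dashboards and alerts mentioned above, with thresholds per dataset set by the responsible data steward.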

Regulatory Drivers: GDPR, CCPA, HIPAA

- GDPR (EU, 2018): Enshrined data privacy, individual rights, purpose limitation, data minimization, and requirements for explicit consent, data breach notification, and the right to erasure (right to be forgotten).

- CCPA (California, 2020): Strengthened the right of consumers to access, delete, and restrict the sale of personal data. Its broad definition of “personal information” covers data that can reasonably be linked to a household as well as to an individual, setting a higher bar for governance, data discovery, data matching, and retention policies.

- HIPAA (US healthcare sector): Outlined strict requirements for the security, privacy, and permitted use of personal health information, mandating safeguards and breach notification.

Governance Implications:

- Enterprise-wide data discovery/auditing of personal data.

- Classification and tagging of sensitive data assets with automated enforcement of access, retention, and deletion policies.

- Consent management and enablement of data subject rights, through both internal governance processes and external-facing request channels.

- Integration between regulatory requirements and technical workflows in metadata catalogs, data lineage, security controls, and reporting mechanisms.
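Classification and tagging with automated enforcement, as listed above, can be illustrated with a pattern-based tagger and a masking step. This is a deliberately simple sketch: the two regular expressions are rough heuristics, and the tag names and function names are hypothetical. Real classifiers combine many more signals and are tuned per jurisdiction.

```python
import re

# Rough illustrative patterns, not production-grade detectors.
PII_PATTERNS = {
    "email": re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+"),
    "phone": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def classify_columns(rows):
    """Tag each column with the PII types seen in its values."""
    tags = {}
    for row in rows:
        for col, value in row.items():
            if not isinstance(value, str):
                continue
            for tag, pattern in PII_PATTERNS.items():
                if pattern.fullmatch(value.strip()):
                    tags.setdefault(col, set()).add(tag)
    return tags


def mask_row(row, tags, allowed_tags=frozenset()):
    """Mask any column carrying a tag the caller is not cleared for."""
    return {
        col: "***" if tags.get(col, set()) - allowed_tags else val
        for col, val in row.items()
    }
```

Separating classification from enforcement means the same tags can also drive retention and deletion policies, not only masking.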

Businesses failing to meet these requirements not only face legal and financial risks but may suffer reputational harm and erode stakeholder trust.

Timeline of Key Milestones from Governance to Data Science

The following table summarizes key events in the evolution from data governance to data science (with selected regulatory, academic, and technological milestones).

| Year / Period | Milestone |
| --- | --- |
| 1960s–1970s | Origins of database management (IDS, IMS); data quality and consistency concerns arise |
| 1970 | Relational database model introduced (E.F. Codd) |
| 1977 | IASC established to connect statistics and computing |
| 1980s | Emergence of data warehouses and BI; initial policy-driven approaches to governance |
| Early 1990s | Formalization of data governance as a discipline; role of stewards, policies, councils |
| 1996 | HIPAA enacted, demanding data privacy and security in US healthcare |
| 2001 | Cleveland proposes “Data Science” as a discipline |
| 2002 | Sarbanes-Oxley mandates controls, traceability, auditability for finance; DGI founded |
| Early 2000s | DAMA-DMBOK and other governance frameworks take hold |
| Mid-2000s | Emergence of big data, cloud, NoSQL; data lakes appear |
| 2010s | Advanced analytics, machine learning, and AI integration; rise of data mesh/fabric |
| 2018–2020 | GDPR becomes applicable (2018) and CCPA is enacted (2018) and takes effect (2020), raising the bar for enterprise data governance and privacy |
| Late 2010s | AI and model governance frameworks emerge to address risk, fairness, explainability |
| 2020s | Responsible/ethical AI, explainability, automated and federated governance |
| 2024–2025 | EU AI Act, Digital Markets Act; maturity of data mesh, AI, and model governance |

Sources: [7][14][15][21][26][27][36][22][23][32][33][42][43]

Academic Literature Mapping the Evolution of Data Governance to Data Science

Academic research has mirrored and sometimes anticipated these developments:

- Data governance literature (2007–2024): Systematic reviews reveal surging interest, with 3,500+ documents and 9,000+ researchers spanning compliance, privacy, stewardship, quality, and AI impact.

- Evolution of Key Themes: Early themes (2007–2017): compliance, stewardship, privacy. Expansion (2018–2020): privacy, health, smart systems. Latest focus (2021–2024): AI, global governance, ethics, and international frameworks.

- Key Academic Contributions: William S. Cleveland (2001, “Data Science: An Action Plan…”), Fayyad et al. (“From Data Mining to Knowledge Discovery in Databases”), and many others bridged governance, modeling, and scientific inquiry.

- Institutional Hubs: Universities such as Oxford, Toronto, Edinburgh, Melbourne, and KU Leuven, together with industry contributors such as State Grid Corp of China, lead with prolific publication and practical research integration.

Case Studies of Governance Principles Applied in Data Science

1. Airbnb: Data Literacy and Democratization

Airbnb, recognizing that effective data governance depends on a data-literate workforce, launched “Data University”—an internal initiative to empower employees with data understanding, driving responsible, widespread data use and governance adherence.

2. GE Aviation: Centralized Self-Service Data Management

GE Aviation created a Self-Service Data (SSD) team for user enablement, paired with a Database Admin team for compliance and data governance. Their process involves server-side checks, documentation, and automation—streamlining not just access but policy compliance and operational safety.

3. Wells Fargo: Single Source of Truth

Aiming to reduce data silos, Wells Fargo built a single source of truth using centralized data management and visualization tools (e.g., Tableau) for accessibility and governance. The outcome: more accurate reporting, reduced business risk, and improved stakeholder trust.

4. Uber: Federated, Country-Specific Governance

Uber’s global presence obliges compliance with myriad local data laws. The solution: a central platform for privacy/security, layered with region-custom plugins and customized workflows, supported by continuous training—a showcase of federated governance in a multinational context.

5. Tide: GDPR-Driven Automation

The UK digital bank Tide transformed Right to Erasure compliance with an automated metadata framework, reducing the PII-tagging effort from 50 days to mere hours and enabling rapid regulatory responsiveness.

Influence of Ethical and Responsible AI Principles on Data Science Governance

Responsible AI principles are shaping data science governance in crucial ways:

- Bias and Fairness: AI systems must be designed and continuously audited to minimize systemic biases and discriminatory outcomes, aligning with legal and societal expectations.

- Transparency and Explainability: As models affect more consequential decisions, the ability to explain AI logic to regulators, users, and affected parties becomes a governance imperative.

- Privacy and Data Protection: AI/ML models must respect privacy by default—supporting data minimization, anonymization, and consent protocols.

- Accountability: Organizations must document decision rights and review boards to ensure AI/ML models don’t “run wild” outside control or cause unanticipated harm. Regulatory frameworks increasingly codify these requirements (e.g., EU AI Act’s risk tiers).

- Lifecycle Governance: Continuous monitoring, validation, retraining, and retirements of models, with audit logs and traceable lineage, are now standard.

UNESCO’s global AI ethics and governance frameworks recommend not only technical controls but also cultural and institutional strategies for aligning AI with human rights, sustainability, inclusion, and democratic values.

Future Trends: Data Mesh, Data Fabric, and Governance in AI-Driven Data Science

Data Mesh Governance

A response to the limitations of both wholly centralized and wholly fragmented approaches, data mesh governance distributes ownership of data to domain teams, while enforcing central policies for interoperability, quality, cataloging, and access control. Flexible yet robust, data mesh supports scalable, domain-driven data products, automated policy enforcement, and agile adaptation to business needs.

Key Features:

- Federated/domain-level stewardship.

- Modular, policy-automated infrastructure.

- Discoverable, documented, interoperable data products.

- Centralized policy-setting; decentralized execution.
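The split between centralized policy-setting and decentralized execution can be sketched as a shared policy check that every domain team runs before publishing a data product. The required fields, the classification labels, and all names below are hypothetical and chosen only to illustrate the pattern.

```python
from dataclasses import dataclass, field

# Central policy: defined once by the governance function.
REQUIRED_FIELDS = ("owner", "description", "classification")
ALLOWED_CLASSIFICATIONS = {"public", "internal", "restricted"}


@dataclass
class DataProduct:
    domain: str   # owning domain team, e.g. "payments"
    name: str
    metadata: dict = field(default_factory=dict)


def policy_violations(product):
    """Return a list of central-policy violations for one product."""
    problems = [
        f"missing {f}" for f in REQUIRED_FIELDS if not product.metadata.get(f)
    ]
    cls = product.metadata.get("classification")
    if cls and cls not in ALLOWED_CLASSIFICATIONS:
        problems.append(f"unknown classification: {cls}")
    return problems


def publishable(product):
    """Decentralized execution: each domain calls this in its own pipeline."""
    return not policy_violations(product)
```

Domains keep control over what they build and when they ship, while the shared check keeps every published product discoverable and consistently classified.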

Data Fabric

Data fabric connects and standardizes governance, access, and integration across silos—on-premises, in cloud, or at the edge. It emphasizes real-time metadata management, active lineage tracking, and AI-powered automation of compliance and lifecycle controls.

Active and Adaptive AI/ML Governance

As models become ever more ubiquitous, governance is growing adaptive. Real-time monitoring of bias, drift, and regulatory changes is being embedded into model management platforms. Explainability, auditing, and automated remediation of ethical or legal breaches are priorities.

Emerging best practices:

- Automated, AI-enhanced policy enforcement.

- Risk-based, proportional governance (aligning oversight intensity with model impact).

- Interoperability protocols and global standards for cross-border, cross-enterprise, and open data ecosystems.

- “Governance platforms as a service” (Atlan, Informatica, Collibra, etc.) integrating end-to-end lifecycle safeguards.

Conclusion: The Governance-Driven Future of Data Science

The evolution from data governance to data science is neither a simple progression nor an obsolescence of one by the other. Instead, data governance has matured from a back-office IT function into a mission-critical, organization-wide discipline that enables, empowers, and constrains data science, analytics, and AI at every level. Modern data science, whether in business, government, or research, cannot exist without strong, adaptive, and ethical data governance.

In the coming decade, governance will continue to inform both the operational mechanics (metadata, lineage, validation, monitoring) and the cultural ethos (responsibility, fairness, transparency, and trust) of data-driven practice. As AI, ML, and automation advance, the most successful data science efforts will be those deeply rooted in rigorous, inclusive, future-adaptive governance.

Businesses, policymakers, and researchers are advised: invest not just in the latest analytics, but in the frameworks, standards, and cross-disciplinary stewardship that ensure data science remains a force for insight, equity, and innovation.

Timeline Table: Key Milestones from Data Governance to Data Science

| Year / Period | Key Milestone |
| --- | --- |
| 1960s–1970s | Inception of database management (IDS, IMS), emergence of basic governance principles |
| 1970 | Relational database model (E.F. Codd), SQL |
| 1977 | Creation of IASC, first step to merge statistics and computing |
| 1980s | Widespread data warehousing and BI, growth of policy-driven management, MDM roots |
| Early 1990s | Regulatory pressures and formalization of data governance as an organizational function |
| 1996 | HIPAA (US), privacy and security in healthcare |
| 2001 | “Data Science” defined as a discipline (Cleveland), tech/academic debate emerges |
| Early 2000s | Proliferation of governance frameworks (DAMA, DGI, DMBOK) |
| 2007 | First academic publications using “data governance” |
| 2010s | Rise of big data, NoSQL, data lakes, machine learning integrated in mainstream analytics |
| 2018 | GDPR and CCPA define global standards for privacy, data rights, consent |
| Late 2010s | Rapid deployment of AI/ML; explainability, fairness, model risk threaten status quo of governance |
| 2020s | Model & AI governance frameworks mature; EU AI Act; data mesh and fabric become commonplace |
| 2024–2025 | Real-time, automated, dynamic governance: governing not just data, but also models and algorithms |

Key Takeaway: The journey from data governance to data science reflects not merely a shift in technology, but a profound evolution of how organisations, governments, and societies understand, harness, and safeguard the value of data. Robust, adaptive, and ethically anchored governance remains the bedrock on which the next generation of data science must stand.